What Computer Vision Misses

Computer vision can tell you there is a person in the image. It might even tell you they are wearing a helmet or standing next to a vehicle. That is helpful if your job is labeling images for ad targeting. It is useless if your job is understanding what is happening.

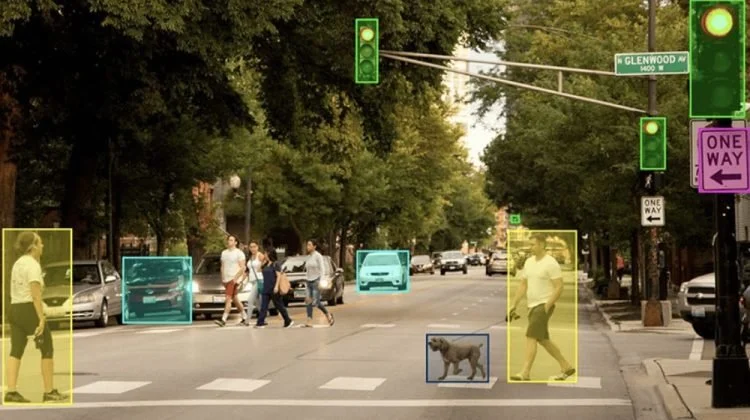

This is the problem. Vision tools today are built to identify objects, not context. They are designed to detect, not interpret. You get boxes around heads. Labels like “car” or “tree.” You do not get meaning.

Now imagine you are handed a video from a source you do not know. There is no metadata. There is a storefront sign in another language. A flag in the background. Someone wearing military gear. That image contains clues. But most AI tools cannot tell you what they are or where they point.

Computer vision system identifying multiple objects in an urban crosswalk scene.

At Beor AI, we are building something different. We use models that not only detect visual features but place them in space and context. We analyze frames for landmarks, text, terrain, architecture, and patterns. We extract clues from the background. We locate content on a map even when GPS data is missing. And we help users refine and build on what the AI finds.

This is not a tool for sorting images. It is a system for understanding them. It supports people who need to know where something happened and why it matters. It helps analysts connect moments to places, people to regions, events to meaning.

Computer vision built the camera. We are building the lens. A way to see what is actually there. A way to act on what you find.